NutriSight, making nutritional information more accessible

Nutritional composition is one of the key indicators of the quality of our diet. Depending on their concerns, some people focus on particular values: sugar or carbohydrates, calories or fat. The Nutri-Score stands out because it attempts to give a global, balanced overview by combining several of these values with other elements.

Fortunately, in most countries, nutrition information is compulsory on processed food packaging.

Nutrition, vital information for our food choices

However, if we want to be able to compare foods with each other, calculate the Nutri-Score on products where it is absent, or monitor daily intakes, we need to have the nutritional information in a database. And this is precisely where the Open Food Facts project comes in.

But going from the information printed on the packaging to the information in the database is no mean feat, and requires several minutes of concentrated effort on your phone. Multiply this effort by 3.7 million products, add in the fact that this information often changes, and you’ll understand that even for a project like Open Food Facts with many motivated contributors, it remains quite a challenge.

Accelerating access to this information

Fortunately, image recognition techniques can help, as the Open Food Facts Artificial Intelligence team has believed for several years. Unfortunately, several attempts by volunteers failed: the problem could not be solved so simply. Between the diversity of languages, formats, image quality, curved packaging and so on, the task proved much harder than expected.

The DRG4Food programme, funded by the European Commission, changed all that. Through this programme, to which we submitted our NutriSight project, we were able to develop a new model based on recent advances in image recognition.

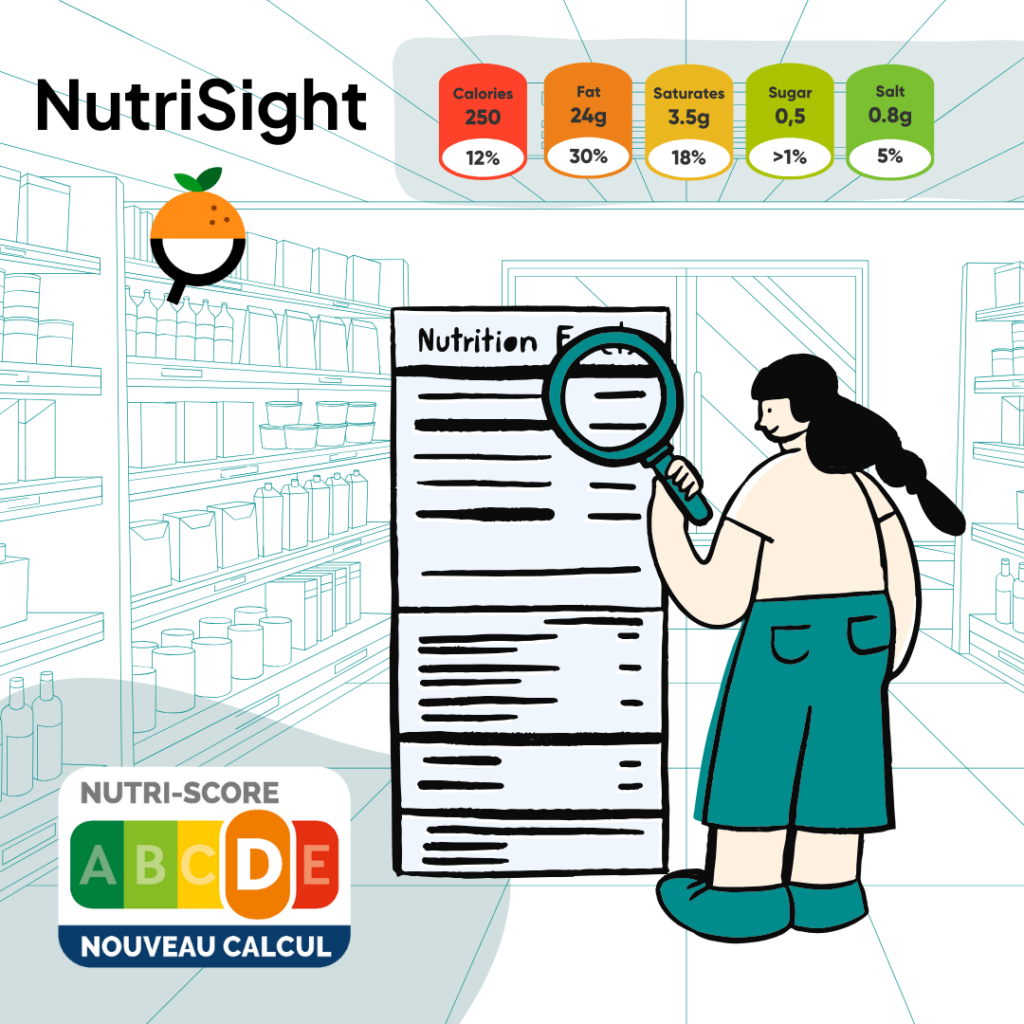

In collaboration with the GoCoCo application, which uses data from our open database, we annotated numerous images and then developed a model capable of extracting nutrition data with a high degree of accuracy.

Of course, in the spirit of our project, everything is open source: the training data, the model, and the code to deploy it.

Thanks to this new tool, we hope to add and update nutrition information for many more food products, increasing transparency and supporting more informed, healthier choices.

How to get involved

✔️ If you use the Open Food Facts mobile app or website, you will soon be able to benefit from these prediction tools in both.

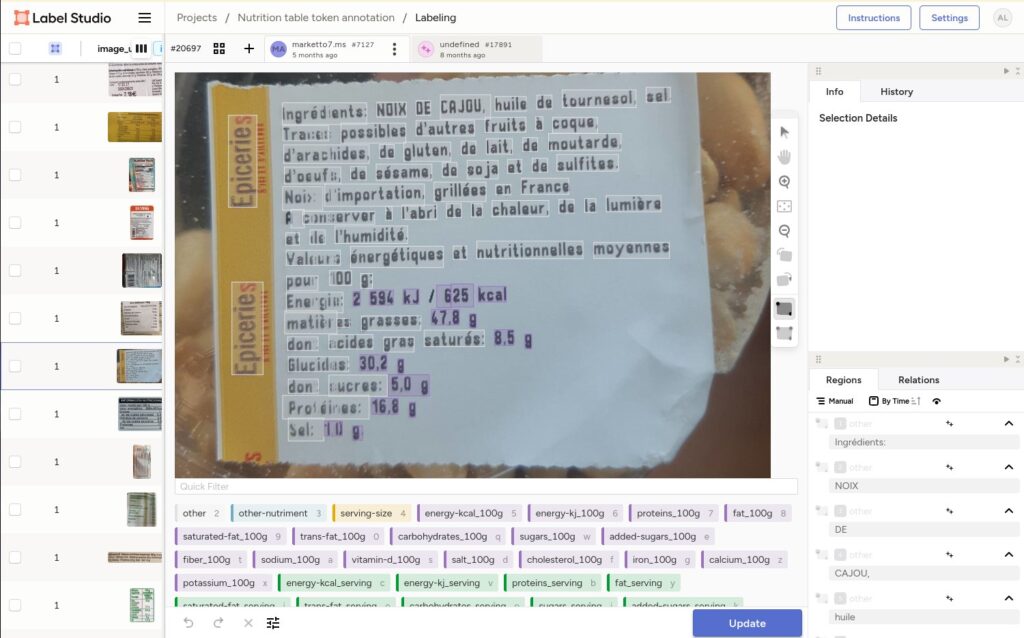

✔️ If you contribute to the database, you can use the Hunger Games platform to quickly validate the nutritional information of a whole host of food products.

✔️ If you reuse Open Food Facts data, you can integrate this model into your application through the dedicated robotoff API (see also the introduction), and possibly add the newly developed web component to validate the extracted information. You could even deploy the model on your own servers.

✔️ If you’re a data scientist, you can help us improve the model, extend it to more data, or make it available on mobile. You will probably be interested in the model, the data and the training code.

Many thanks again to the DRG4Food programme, funded by the European Commission, which has given us the opportunity to speed up the race towards greater transparency in food.
